Published on Jun 30, 2025

Expert-Led LiDAR Annotation Pipeline for Real-World Autonomy

At Labelbees, we’ve engineered a precision-focused annotation pipeline to support LiDAR perception tasks in autonomous vehicles. Built with enterprise-grade quality control and human-in-the-loop validation, the pipeline was tested using publicly available datasets and is designed to integrate with both open-source and proprietary workflows.

This work demonstrates our ability to deliver scalable, expert-led annotations that meet the demands of real-world autonomy systems across 3D spatial data, sensor fusion, and time-series consistency.

Why We Built It: From Concept to Capability

Modern autonomous vehicles must make split-second decisions in complex, dynamic environments. Perception accuracy isn’t just a technical challenge; it’s a safety imperative. Off-the-shelf tools and generic workflows often fall short when applied to high-density 3D LiDAR data at scale.

That’s why we engineered a custom annotation pipeline, purpose-built for autonomous perception systems, that embeds domain expertise, human-in-the-loop oversight, and scalable QA mechanisms from the start.

Our objectives were clear:

- Develop a flexible, ontology-driven framework tailored for 3D spatial understanding and AV-specific semantics.

- Ensure precision and temporal consistency in cuboid annotations across large-scale LiDAR sequences.

- Accelerate throughput with intelligent task routing and expert validation without compromising on quality.

- Support downstream AV tasks, including multi-object tracking, motion forecasting, and sensor fusion alignment.

Tackling Real-World Complexity with Open-Source LiDAR

To validate our pipeline under real-world conditions, we worked with open-source LiDAR datasets that reflect the operational complexity of production-grade AV systems. These environments revealed the kinds of edge cases that enterprise AV teams routinely face and that our expert workflows are designed to handle.

Here’s how we addressed some of the most pressing challenges:

- Varying Point Density: Sparse point clouds, primarily from distant objects, required advanced interpolation and data fitting techniques to ensure accurate 3D reconstructions.

- Cluttered Urban Scenes: In complex environments with occlusions, overlapping actors, and structural noise, we implemented robust annotation logic to ensure every relevant object was precisely labeled.

- Temporal Coherence: Multi-frame sequences demanded a high level of consistency. Our system kept cuboids aligned with moving objects and object IDs consistent across frames, even under challenging environmental changes.

- High Annotation Fidelity: Modern AI models require more than basic object bounding boxes. We delivered enriched annotations that provided the structure, semantics, and contextual relationships necessary for advanced perception tasks.
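One way to picture the temporal coherence requirement is as an automated check that runs over a labeled sequence. The sketch below is a hypothetical illustration, not Labelbees' actual implementation. It assumes each frame is a dictionary mapping a track ID to its cuboid center in meters, a 10 Hz sweep rate, and an arbitrary plausibility bound on speed. Any track whose center jumps farther between consecutive frames than that bound allows gets flagged for review, since such jumps often point to an ID swap or a misplaced box.

```python
import math
from typing import Dict, List, Tuple

Center = Tuple[float, float, float]  # hypothetical (x, y, z) cuboid center in meters


def flag_id_jumps(
    frames: List[Dict[str, Center]],
    frame_dt: float = 0.1,     # assumed 10 Hz LiDAR sweep interval, in seconds
    max_speed: float = 40.0,   # assumed plausibility bound, in m/s
) -> List[Tuple[int, str, float]]:
    """Return (frame_index, track_id, displacement) for implausible jumps.

    A track is flagged when its center moves farther between two consecutive
    frames than max_speed * frame_dt permits.
    """
    max_step = max_speed * frame_dt
    flagged = []
    for i in range(1, len(frames)):
        prev, curr = frames[i - 1], frames[i]
        # Compare only IDs present in both frames; sort for deterministic output.
        for track_id in sorted(prev.keys() & curr.keys()):
            step = math.dist(prev[track_id], curr[track_id])
            if step > max_step:
                flagged.append((i, track_id, step))
    return flagged


# Illustrative three-frame sequence: car_1 jumps 19 m between frames 1 and 2.
frames = [
    {"car_1": (10.0, 2.0, 0.0), "ped_7": (5.0, -1.0, 0.0)},
    {"car_1": (11.0, 2.0, 0.0), "ped_7": (5.1, -1.0, 0.0)},
    {"car_1": (30.0, 2.0, 0.0), "ped_7": (5.2, -1.0, 0.0)},
]
print(flag_id_jumps(frames))  # → [(2, 'car_1', 19.0)]
```

A displacement threshold is deliberately simple. In practice, a check like this would only route suspicious frames to a human reviewer rather than decide on its own whether a label is wrong.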

Our Solution: Purpose-Built, Ontology-Driven, Expert-Guided

To meet the complex demands of 3D LiDAR annotation for autonomous systems, we developed a tailored pipeline that fuses deep domain expertise with intelligent workflows, ensuring accuracy, consistency, and scalability from day one.

Key components of our solution include:

- Ontology-Driven Framework: We designed an internal, extensible taxonomy specifically for AV perception, capable of capturing diverse traffic actors, scene dynamics, and edge-case scenarios with precision.

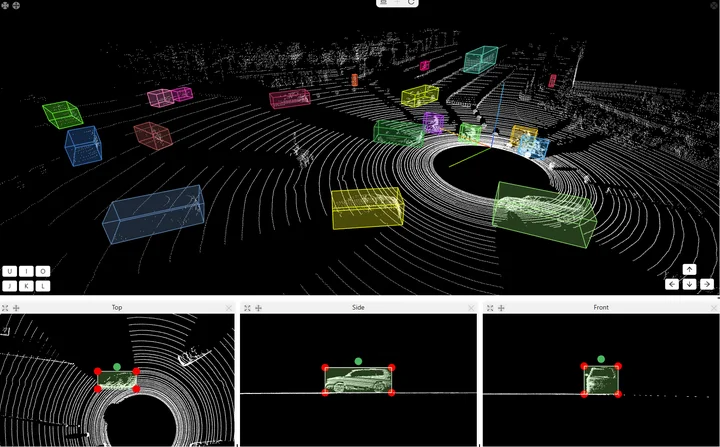

- High-Fidelity 3D Cuboids: Every object was annotated with tightly fitted 3D cuboids, capturing location, orientation, and physical dimensions critical to real-world sensor interpretation.

- AI-Augmented Expert Input: Our hybrid workflow paired expert annotators with AI-assisted keyframe interpolation, accelerating output while preserving temporal coherence across frames.

- Context-Aware Metadata: We went beyond object detection to label occlusion levels, truncation, and scene context, enabling downstream tasks like behavior prediction and trajectory modeling.

- Rare & Ambiguous Class Handling: Edge cases were not ignored. They were carefully identified, labeled, and documented to improve model generalization and reduce blind spots.

- Multi-Layered Quality Assurance: Our validation pipeline includes automated checks, ontology alignment, and expert review, ensuring enterprise-grade reliability and auditability.
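To make the cuboid and keyframe interpolation ideas above more concrete, here is a minimal sketch of how a 3D cuboid might be represented and filled in between two expert-labeled keyframes. It is an illustrative assumption, not the pipeline's actual code. The `Cuboid` fields (center, dimensions, yaw around the vertical axis, an occlusion level), linear interpolation of position and size, and shortest-arc interpolation of heading are all choices made for this example.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Cuboid:
    """Hypothetical cuboid record: center and size in meters, yaw in radians."""
    track_id: str
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float          # heading around the vertical (z) axis
    occlusion: int = 0  # illustrative occlusion level, e.g. 0 = fully visible


def _lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def _lerp_angle(a: float, b: float, t: float) -> float:
    """Interpolate headings along the shortest signed arc.

    Without this, yaw 3.0 -> -3.0 would sweep through 0 and spin the box
    almost a full half-turn instead of rotating about 0.28 rad.
    """
    delta = math.atan2(math.sin(b - a), math.cos(b - a))
    return a + delta * t


def interpolate(k0: Cuboid, k1: Cuboid, t: float) -> Cuboid:
    """Estimate the cuboid at fraction t between keyframes k0 and k1.

    Metadata such as occlusion is copied from k0, since it is not
    meaningful to interpolate; an annotator would confirm it.
    """
    if k0.track_id != k1.track_id:
        raise ValueError("keyframes must belong to the same track")
    return replace(
        k0,
        x=_lerp(k0.x, k1.x, t),
        y=_lerp(k0.y, k1.y, t),
        z=_lerp(k0.z, k1.z, t),
        length=_lerp(k0.length, k1.length, t),
        width=_lerp(k0.width, k1.width, t),
        height=_lerp(k0.height, k1.height, t),
        yaw=_lerp_angle(k0.yaw, k1.yaw, t),
    )


# Two keyframes of the same car whose heading crosses the +/-pi boundary.
k0 = Cuboid("car_1", 0.0, 0.0, 0.0, 4.5, 1.8, 1.5, yaw=3.0)
k1 = Cuboid("car_1", 10.0, 0.0, 0.0, 4.5, 1.8, 1.5, yaw=-3.0)
mid = interpolate(k0, k1, 0.5)
print(mid.x, round(mid.yaw, 4))  # → 5.0 3.1416
```

Interpolated frames like `mid` are only proposals. In a human-in-the-loop workflow such as the one described above, an annotator would still inspect and adjust them before they count as ground truth.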

What We Delivered

After rigorous testing on multiple open-source LiDAR datasets, our annotation pipeline demonstrated standout results:

- 30% faster annotation cycles: driven by automation and streamlined workflows.

- Consistent, frame-aligned labels: supporting temporal perception tasks like tracking and motion forecasting.

- Scalable throughput: capable of processing enterprise-scale 3D datasets without sacrificing quality.

- Alignment with production-grade AV requirements: from object detection to trajectory prediction and sensor fusion.

An annotated LiDAR point cloud from our expert-led pipeline, showcasing high-precision 3D cuboids with temporal and semantic consistency.

Why It Matters: The Business Impact

Our in-house solution is not only a technical asset but also a force multiplier for building autonomous systems, robotic platforms, and next-generation AI products.

- Accelerates AI development: Structured, high-fidelity annotations reduce the number of training cycles, shorten iteration loops, and speed up deployment.

- Lowers cost and operational complexity: High-quality data reduces the need for relabeling, minimizes debugging time, and reduces the risk of model failures in downstream systems.

- Enables sensor fusion: Accurate 3D ground truth data provides a backbone for fusing LiDAR, radar, and camera streams in advanced perception stacks.

- Supports safety and regulatory validation: Rich metadata and semantic precision facilitate scenario-based validation and compliance with evolving safety standards.

- Built to scale: Whether you're building AV fleets, scaling ADAS systems, or testing robotics systems at production scale, our infrastructure supports high-throughput expert input you can trust.

Powering What’s Next in Autonomy

At Labelbees, we’ve developed a robust, scalable annotation solution purpose-built for autonomy, powered by internal workflows, expert input, and a commitment to precision. Our approach shows that high-performance LiDAR annotation doesn’t come from off-the-shelf shortcuts; it demands a deep understanding of the domain and deliberate engineering.

We’re proud to support the teams shaping the future of intelligent mobility, robotics, and defense.

If your AI systems rely on 3D perception, we’re ready to help. Let’s make your models smarter, faster, and safer, with expert-validated data built for real-world complexity.